How to Interpret U.S. News College Rankings

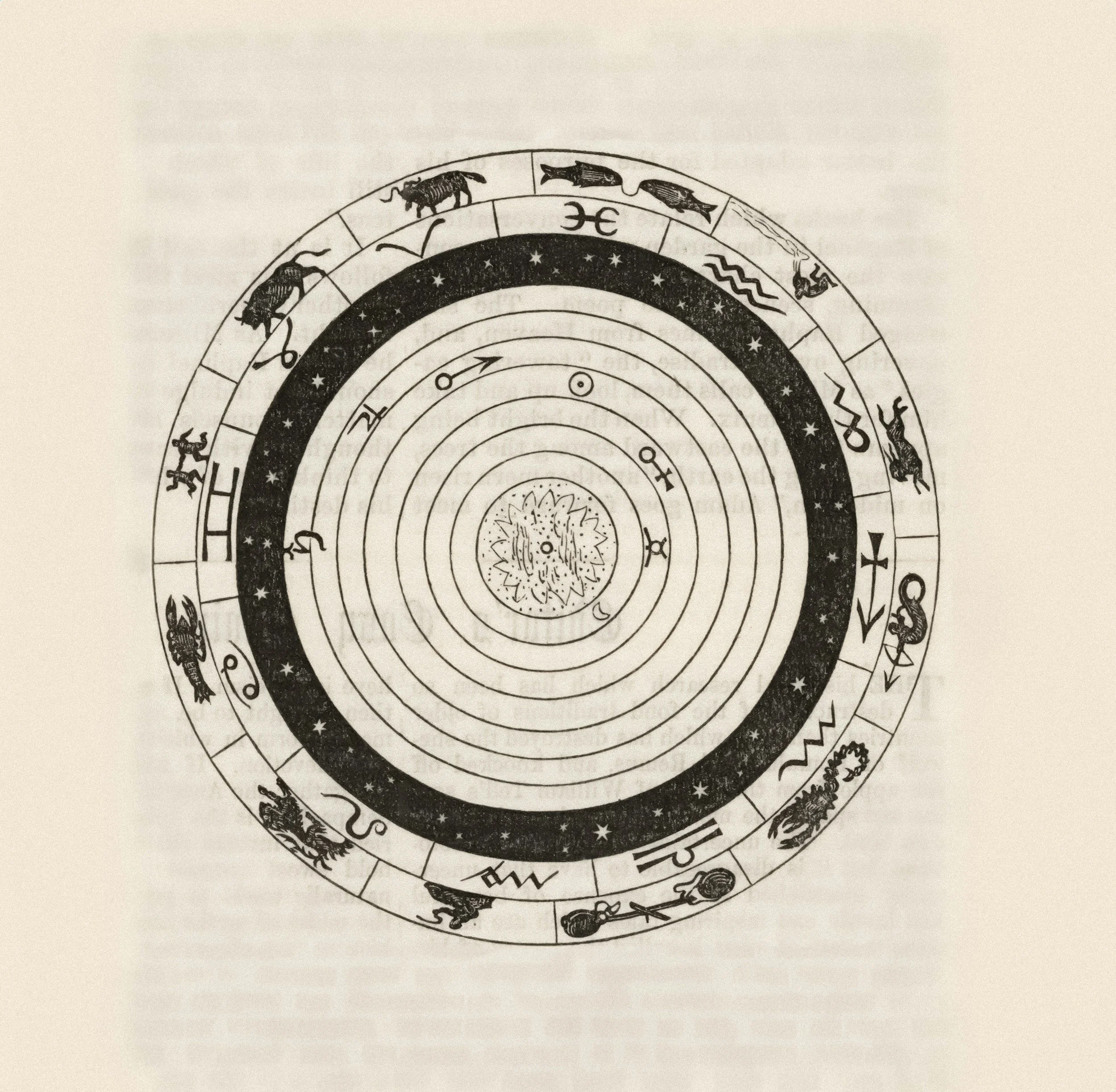

We believe U.S. News rankings are effectively astrology and should be taken with a grain of salt. However, if you want to use them to build a college list for yourself or your child, here is how.

For readers who want to understand exactly what U.S. News measures, here's the complete breakdown for National Universities (2026 methodology):

Graduation rates (16%): Completion for first-time, full-time students within 150% of normal time

First-year retention (5%): Persistence from year 1 to year 2

Graduation rate performance (10%): Model-based comparison to predicted rate (noisy and sensitive to modeling choices)

Pell graduation rates (3%): Completion rates for Pell-eligible students

Pell graduation performance (3%): Pell vs. non-Pell completion comparison

First-gen graduation rates (2.5%): Completion rates for first-generation students

First-gen graduation performance (2.5%): First-gen vs. non-first-gen comparison

Borrower debt (5%): Federal loan debt among graduates (excludes private loans, parent borrowing, and non-completers)

Earnings proxy (5%): Share of graduates earning more than high school graduates, using federal tax data

Peer assessment (20%): Reputation survey among college administrators

Financial resources (8%): Spending per student

Faculty salaries (6%): Average faculty compensation

Student-faculty ratio (3%): Reported ratio (doesn't capture actual class sizes or advising access)

Full-time faculty percentage (2%): Proportion of faculty employed full-time

Test scores (5%): SAT/ACT scores where reported

Research metrics (4%): Publications and citations (National Universities only)

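Important methodological note: All indicators are converted to z-scores within the ranking universe, then rescaled so the top school scores 100. This means small raw differences can create large ranking gaps, and large raw differences can create small ones. The rank is an ordinal index, not an interval scale: the gap between #5 and #10 need not be the same size as the gap between #10 and #15.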
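To make the standardize-then-rescale step concrete, here is a minimal Python sketch. It is not U.S. News's actual code: the four schools and their numbers are made up, only two of the indicators are used, and the min-max rescale (lowest school set to 0, top school set to 100) is our assumption about how the final step might work.

```python
from statistics import mean, pstdev

def z_scores(values):
    """Standardize raw values: (x - mean) / population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def composite(indicators, weights):
    """Weighted sum of z-scores per school, rescaled so the top school is 100.

    Assumption: a min-max rescale (lowest school = 0). The published
    rescaling may differ in detail.
    """
    n_schools = len(next(iter(indicators.values())))
    totals = [0.0] * n_schools
    for name, raw in indicators.items():
        for i, z in enumerate(z_scores(raw)):
            totals[i] += weights[name] * z
    lo, hi = min(totals), max(totals)
    return [100 * (t - lo) / (hi - lo) for t in totals]

# Hypothetical data for four made-up schools (A, B, C, D).
indicators = {
    "grad_rate": [0.96, 0.95, 0.94, 0.93],
    "retention": [0.98, 0.98, 0.97, 0.97],
}
weights = {"grad_rate": 0.16, "retention": 0.05}

scores = composite(indicators, weights)
for school, score in zip("ABCD", scores):
    print(school, round(score, 1))
```

In this toy example the schools differ by only three percentage points in graduation rate and one point in retention, yet their composite scores span the full 0 to 100 range. That is the sense in which a tight cluster of raw numbers can turn into a wide spread of ranks.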

How to Actually Use Rankings: The Filter Approach

Use rankings as a starting filter, not a final answer. Here's the responsible workflow:

1. Use rankings to identify schools with strong completion and reasonable debt

If a school ranks highly, it likely has:

High graduation rates for its student population

Strong institutional resources

Low debt burdens among graduates

Decent post-graduation earnings

These are real outcomes worth caring about. A school that graduates 95% of students in four years is doing something right systemically, even if that "something" might be mostly "admitting wealthy, well-prepared students."

2. Verify the data yourself

Don't trust institutional self-reporting blindly. The Columbia University ranking controversy, where discrepancies in reported data led to a $9 million settlement, shows this isn't hypothetical.

Cross-check against:

Common Data Set (CDS): Standardized reporting for admissions, financial aid, class sizes

IPEDS (Integrated Postsecondary Education Data System): Federal reporting on graduation, retention, demographics

College Scorecard: Federal data on net price, debt, earnings by field of study

3. Ask the questions rankings can't answer

Instead of asking "What's the best college?", ask domain-specific questions that actually matter:

Academic Quality: How do students in my major experience instruction? What's the typical class size in upper-division courses? Find this in department course catalogs, CDS sections, and student engagement surveys.

Teaching: Who teaches intro courses in my intended major, faculty or TAs? Check department websites and course schedules.

Support Services: What's the advising ratio? How quickly can students get appointments? Contact student services offices and ask about retention programs.

Mental Health: What's the counseling center wait time? What crisis support exists? Check counseling center websites and campus climate surveys.

True Affordability: What's my actual net price, not sticker price? Is aid renewable? Use the net price calculator, College Scorecard, and contact the financial aid office directly.

Equity: How do first-gen and Pell students perform here? What support programs exist? Look at IPEDS subgroup outcomes and campus support programs.

Career Outcomes: What's the NACE-compliant career outcomes rate? What are outcomes by major? Review career center first-destination reports and verify NACE compliance.

Fit: What's daily life like for students like me (commuter, working student, athlete)? Visit campus, interview current students, investigate housing resources.

The "Value-Added" Illusion

U.S. News includes "graduation rate performance," comparing actual completion to predicted benchmarks. This sounds good in theory: it attempts to control for student inputs.

In practice, research shows these measures are highly sensitive to modeling choices and sampling definitions. Many institutions' "over/under-performance" is not statistically distinguishable from zero once you account for uncertainty. Treat these as extremely coarse signals, not precise measurements.
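A rough way to see why a small over-performance may mean nothing is to put an interval around it. The Python sketch below is a simplification under stated assumptions: it counts only binomial sampling noise in the actual graduation rate and ignores uncertainty in the predicted rate, so a real interval would be wider. The function names and example numbers are ours, for illustration only.

```python
from math import sqrt

def performance_interval(actual_rate, predicted_rate, cohort_size, z=1.96):
    """Approximate 95% interval for (actual - predicted) graduation rate.

    Assumption: only sampling noise in the actual rate is counted; the
    predicted rate is treated as exact, which understates the uncertainty.
    """
    se = sqrt(actual_rate * (1 - actual_rate) / cohort_size)
    diff = actual_rate - predicted_rate
    return diff - z * se, diff + z * se

def distinguishable_from_zero(interval):
    """True only if the whole interval lies on one side of zero."""
    low, high = interval
    return low > 0 or high < 0

# Hypothetical cohorts of 1,000 students, both predicted to graduate at 80%.
small_gap = performance_interval(0.82, 0.80, 1000)
large_gap = performance_interval(0.88, 0.80, 1000)

print(distinguishable_from_zero(small_gap))
print(distinguishable_from_zero(large_gap))
```

Under these assumptions, a two-point "over-performance" in a cohort of 1,000 cannot be told apart from zero, while an eight-point gap can. Adding the model's own uncertainty would push more schools into the "cannot tell" group.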

Better Data Sources for Outcomes

For post-graduation outcomes, ignore the ranking and go directly to:

Immediate placement (within 6 months)

Use NACE (National Association of Colleges and Employers) standards for first-destination outcomes. NACE defines "career outcomes rate" and "knowledge rate" with specific protocols to prevent misleading "placement rate" claims.

Check: Does the school publish NACE-compliant reports? What's the response rate? (Low response rates make data unreliable.)
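To see why the response rate matters, you can bound what the outcomes rate would be across all graduates, not just the ones the school heard from. The Python sketch below is simple arithmetic, not a NACE formula: it assumes the reported rate is calculated only among graduates with known outcomes, and the function name and figures are hypothetical.

```python
def outcome_bounds(reported_rate, knowledge_rate):
    """Worst-case and best-case outcomes rate across ALL graduates.

    reported_rate: positive-outcome share among graduates with known outcomes
    knowledge_rate: share of graduates whose outcome is known
    Worst case assumes every unknown graduate had a negative outcome;
    best case assumes every unknown graduate had a positive one.
    """
    known_positive = reported_rate * knowledge_rate
    unknown_share = 1 - knowledge_rate
    return known_positive, known_positive + unknown_share

# Same headline number (95%), very different coverage.
low_coverage = outcome_bounds(0.95, 0.60)
high_coverage = outcome_bounds(0.95, 0.90)

print([round(x, 3) for x in low_coverage])
print([round(x, 3) for x in high_coverage])
```

With outcomes known for only 60% of graduates, a 95% headline figure is consistent with anything from 57% to 97% of the full class. With 90% coverage, the range narrows to roughly 86% to 96%.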

Medium-run earnings (3-6 years out)

Use College Scorecard field-of-study earnings data. This uses federal tax-linked data, which is far more reliable than institutional self-reporting. You can see earnings by specific major, not just institutional averages.

Long-run mobility

Check the Opportunity Insights Mobility Report Cards (Chetty et al. research). They show what percentage of students come from each income quintile, what income levels students reach as adults, and each school's economic mobility rate.

This reveals stratification that rankings obscure. Elite institutions often score high on rankings while serving predominantly wealthy students and providing limited access to low-income students.
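The mobility rate in that research is the product of two numbers: access (the share of students from the bottom income quintile) and success rate (the share of those students who reach the top quintile as adults). A short Python sketch shows how the two interact. The two schools and their figures are invented for illustration and are not taken from the Mobility Report Cards.

```python
def mobility_rate(access, success_rate):
    """Share of ALL students who start in the bottom quintile and reach the top.

    access: fraction of students from bottom-quintile families
    success_rate: fraction of those students who reach the top quintile
    """
    return access * success_rate

# Hypothetical schools, not real data.
elite_private = mobility_rate(access=0.04, success_rate=0.60)
regional_public = mobility_rate(access=0.15, success_rate=0.35)

print(round(elite_private, 4))
print(round(regional_public, 4))
```

In this made-up comparison, the elite school has the higher success rate, but the regional public school moves more than twice as large a share of its students from the bottom to the top because it enrolls far more low-income students in the first place.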

The Gaming Problem

High-stakes rankings create incentives for institutions to optimize for what's measured rather than what matters. This isn't hypothetical; it's documented in sociological research on "ranking reactivity."

Schools can (and do):

Reallocate resources toward measured indicators

Change admissions practices to boost selectivity metrics

Adjust how they report data

Focus on activities that improve rankings over activities that improve student experience

Some of these behaviors improve real outcomes. Others are superficial compliance. As a consumer, you can't easily distinguish between the two.

Treating Rankings as Testable Hypotheses

The best interpretive framework: translate a high ranking into a hypothesis, then test it.

Hypothesis: "If this school ranks highly, then it should have strong completion rates, good resources, and decent post-grad outcomes."

Test:

Check IPEDS for graduation rates by subgroup (does my demographic succeed here?)

Verify net price for my income level (can I afford it?)

Look at career outcomes by major (not institutional average)

Assess support services relevant to me (first-gen programs, disability accommodations, etc.)

Check teaching quality proxies (who teaches intro courses, class sizes in my major)

If the school passes these tests and you also like the culture, location, and program offerings, great. If it fails on affordability, support, or fit despite ranking well, the ranking is irrelevant to you.
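If it helps to keep the tests organized, the checklist above can be written down as a small pass/fail routine. This Python sketch is only one way to structure it: the field names, thresholds, and both schools are hypothetical placeholders, and you would fill them in from IPEDS, the net price calculator, and career center reports.

```python
from dataclasses import dataclass

@dataclass
class SchoolFacts:
    """Facts you collected yourself; every field name here is hypothetical."""
    name: str
    subgroup_grad_rate: float    # IPEDS graduation rate for your demographic
    net_price: int               # annual net price at your income level, in dollars
    major_outcomes_rate: float   # career outcomes rate for your intended major
    has_needed_support: bool     # e.g. first-gen program, disability services

def failed_tests(school, max_net_price, min_grad_rate=0.70, min_outcomes_rate=0.80):
    """Return the list of tests a school fails (empty list means it passes).

    The default thresholds are placeholders, not recommendations.
    """
    failures = []
    if school.subgroup_grad_rate < min_grad_rate:
        failures.append("completion for my subgroup")
    if school.net_price > max_net_price:
        failures.append("affordability")
    if school.major_outcomes_rate < min_outcomes_rate:
        failures.append("outcomes in my major")
    if not school.has_needed_support:
        failures.append("support services")
    return failures

# Two made-up schools: one ranked higher, one ranked lower.
ranked_5 = SchoolFacts("Ranked #5", 0.91, 42000, 0.88, True)
ranked_15 = SchoolFacts("Ranked #15", 0.86, 21000, 0.90, True)

for school in (ranked_5, ranked_15):
    print(school.name, failed_tests(school, max_net_price=25000))
```

Here the higher-ranked school fails on affordability for this family while the lower-ranked one passes every test. Culture, location, and fit still need a human judgment that no checklist captures.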

A Category Mistake to Avoid

Rankings are institution-level. Students experience college at the program level.

The engineering department and the humanities division at the same university can have completely different:

Teaching quality

Advising structures

Career placement rates

Student satisfaction

Resource allocation

The overall institutional rank tells you almost nothing about your specific experience in your specific major.

The Bottom Line

U.S. News rankings identify institutions that:

Graduate students at high rates

Have strong resources (as measured by spending)

Are perceived as prestigious

They don't measure, and cannot measure, teaching quality, student wellbeing, belonging, culture, or whether you specifically will thrive there.

Use rankings as one filter among many. Then do the work to investigate what actually matters: affordability for your family, outcomes in your intended major, support for students like you, teaching quality in your department, and whether the environment fits your needs.

The school ranked #15 might be dramatically better for you than the school ranked #5. Rankings can't tell you that. Only research, visits, and honest assessment of your priorities can.
